Alyze's power in a crawler

Alyze's SEO crawler is based on an enriched version of Alyze's page analyzer, which has been widely used in the SEO community since 2008. Unlike the page analyzer, the crawler can follow all the links on your site, as a search engine robot would. It produces an analysis of your entire website in which technical problems, SEO weaknesses, poorly adapted structures, etc. are easy to spot.

How does it work? All Alyze members can scan 50 pages for free every month. While this may be enough for very small sites, it is mainly a way to perform a first test of the service. To go further, you need to subscribe to one of our Premium offers. There is something for all budgets (from 7 € / month)!

Our offers

At a glance 😉

Alyze's crawler:

- lists all the internal and external pages of a site,

- detects SEO errors, broken links, duplicate content, and more,

- helps you to understand and fix the problems detected.

Alyze's SEO crawler is in Beta.

What are the limits and requirements?

- For the moment, Alyze's SEO crawler does not interpret JavaScript. It does not work correctly on websites rendered entirely in JavaScript (unless they are prerendered).

- Site analysis works best on sites with fewer than 10,000 pages; beyond that, it has not yet been tested enough.

What is a web crawler?

Search engines like Google use robots to crawl and index billions of pages. These robots are called web crawlers. They connect to a website and then move from page to page, following the links on each page, in order to discover the entire website. On each page, they collect all relevant information: content, structure, keywords, structured data, dates, comments, etc. As they "crawl" along in this way (hence the term crawler), they eventually get a good idea of the whole site, the subjects it deals with, the quality of its content, its internal linking, etc.
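The page-to-page progression described above can be sketched in a few lines of Python. This is only a minimal illustration, not Alyze's implementation: the `crawl` function, its `fetch` parameter and the in-memory `SITE` dictionary (which stands in for real HTTP requests) are all hypothetical names chosen for the example.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def crawl(start_url, fetch, max_pages=50):
    """Breadth-first crawl of the internal links reachable from start_url.

    `fetch` is a hypothetical callable that returns the HTML of a URL,
    or None when the page cannot be retrieved (for example a 404).
    """
    host = urlparse(start_url).netloc
    queue = deque([start_url])
    seen = {start_url}
    visited, broken = [], []

    while queue and len(visited) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            broken.append(url)
            continue
        visited.append(url)

        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            # Resolve relative links and drop any #fragment.
            link, _ = urldefrag(urljoin(url, href))
            # Only follow links that stay on the same host.
            if urlparse(link).netloc == host and link not in seen:
                seen.add(link)
                queue.append(link)

    return visited, broken


# Tiny in-memory "site" used instead of real network requests.
SITE = {
    "https://example.com/": '<a href="/about">About</a> <a href="/blog">Blog</a>',
    "https://example.com/about": '<a href="/">Home</a> <a href="https://other.example/">Ext</a>',
    "https://example.com/blog": '<a href="/missing">Old post</a>',
}

visited, broken = crawl("https://example.com/", SITE.get)
print("visited:", visited)
print("broken:", broken)
```

In this sketch the three existing pages are visited in the order they are discovered, and `/missing` is reported as a broken link because the stand-in `fetch` returns nothing for it.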

All the data collected is then used by search engines to decide how a site is indexed and how each of its pages ranks. That's why it's essential to make an SEO crawler part of your digital marketing strategy! Unlike search engine crawlers, an SEO crawler is not designed to feed a search engine's database. Its purpose is to help you better understand how a website is seen by Google and other search engines. In this way, it helps detect errors that could penalize your SEO. In practice, periodic crawls are essential to identify a site's weak points and ensure its long-term visibility.

Optimise your website with the Alyze SEO crawler

A powerful tool to boost your SEO

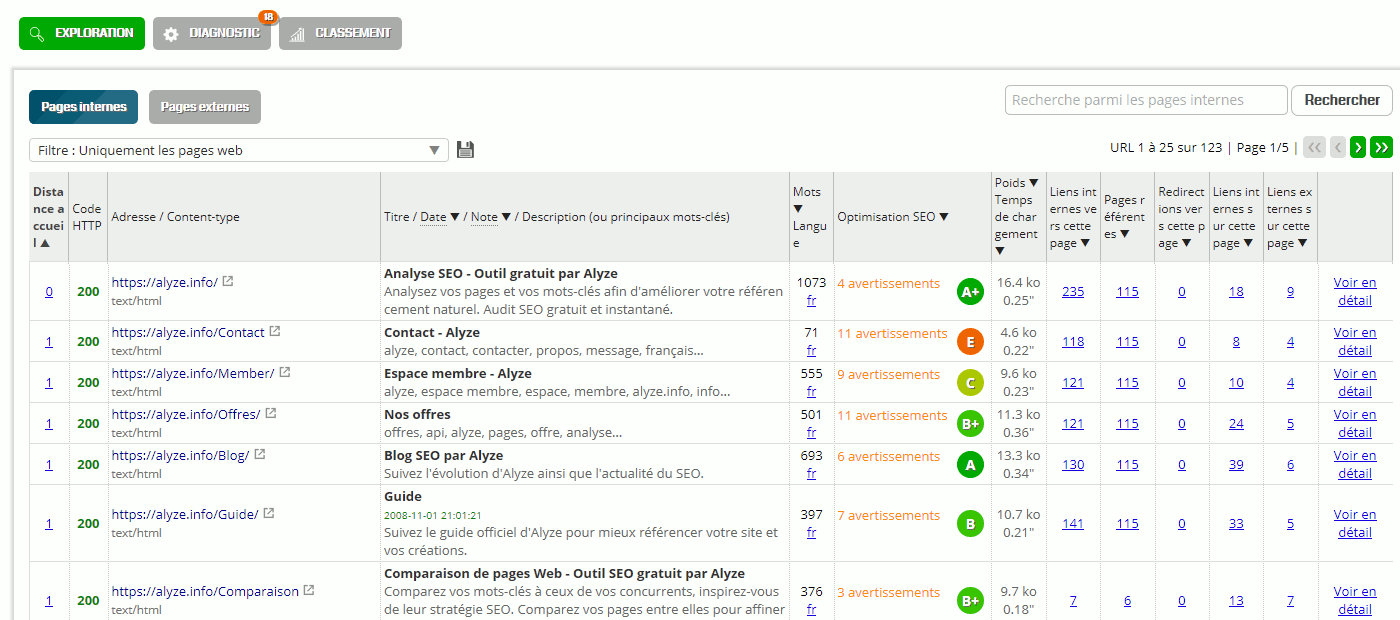

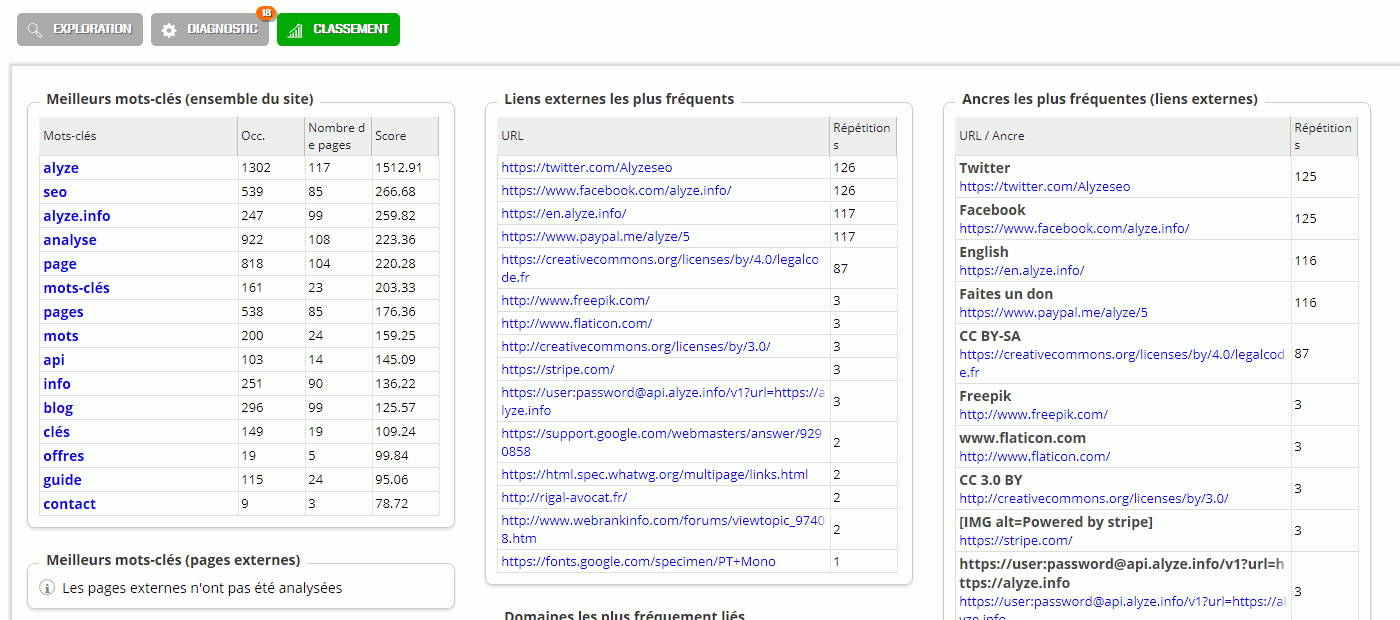

Like a search engine crawler, the Alyze crawler analyzes all the data it finds on a page before following its internal and external links. It is therefore able to present a site's entire internal linking structure, as well as the various errors encountered during the crawl.

The content of each page is analyzed in detail (structure, weight, meta tags, keywords, title, description, internal links, external links, etc.) to quickly identify weak pages or pages in need of reworking. Canonical URLs and redirects are taken into account to give an overall view of the site's internal linking. The indexing directives in the robots.txt file and in meta robots tags are also taken into account.
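To illustrate how robots.txt rules and meta robots tags can be combined when deciding whether a page is indexable, here is a minimal sketch using Python's standard library. It is not Alyze's code: the `index_status` function, the `ExampleBot` user agent and the sample robots.txt are assumptions made for the example.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class MetaRobotsParser(HTMLParser):
    """Collects the directives of <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            content = attrs.get("content") or ""
            for directive in content.split(","):
                if directive.strip():
                    self.directives.add(directive.strip().lower())


def index_status(url, html, robots, user_agent="ExampleBot"):
    """Returns a short, human-readable indexability verdict for one page."""
    # robots.txt is checked first: a blocked page is never downloaded.
    if not robots.can_fetch(user_agent, url):
        return "blocked by robots.txt"
    parser = MetaRobotsParser()
    parser.feed(html)
    # "none" is shorthand for "noindex, nofollow".
    if "noindex" in parser.directives or "none" in parser.directives:
        return "noindex (meta robots)"
    return "indexable"


# Hypothetical robots.txt content, parsed from memory instead of being downloaded.
robots = RobotFileParser()
robots.parse([
    "User-agent: *",
    "Disallow: /private/",
])

hidden = '<head><meta name="robots" content="noindex, follow"></head>'
public = "<head><title>Blog</title></head>"

print(index_status("https://example.com/private/a", public, robots))
print(index_status("https://example.com/draft", hidden, robots))
print(index_status("https://example.com/blog", public, robots))
```

The three calls cover the three cases: a URL disallowed by robots.txt, a page excluded by its meta robots tag, and a page with no restriction.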

Built-in tools make it easier to exploit the data returned by each crawl, notably a highly effective keyword search system and page ranking by degree of SEO optimization or by date (taking structured data into account).

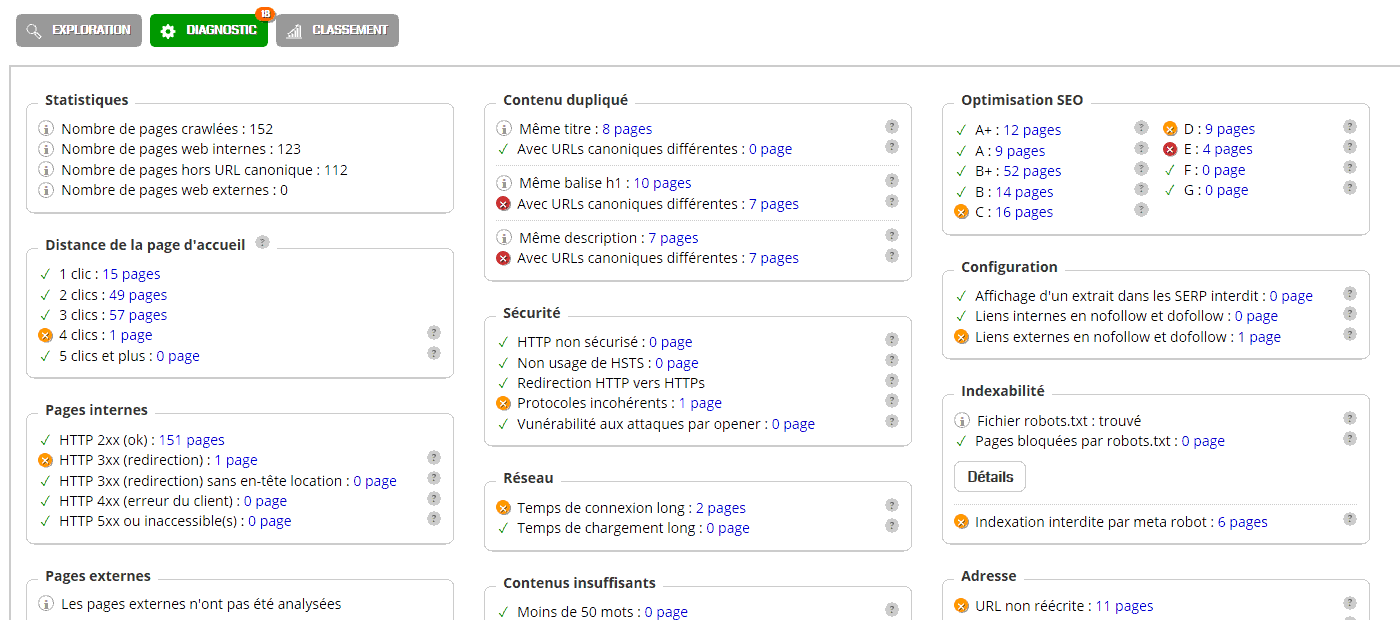

Over 100 SEO errors detected

It's impossible to list all the problems this tool can detect: there are over 100! Not to mention the suggestions for improvement it puts forward. Here, however, is an overview of the most important checks for pleasing Google:

- Detection of HTTP errors (404, 500, etc.)

- Duplicate content

- Audit of poor-quality pages or content

- Indexing blocked or restricted by meta robots tags

- Correct use of the HTTPS and HTTP protocols

- Redirects with and without www

- Pages without a description, without an h1 tag, with a poorly adapted title, etc.

- URLs that need to be rewritten

- And many more!
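A few of the on-page checks listed above (missing description, missing h1 tag, poorly adapted title) can be sketched as follows. This is an illustrative example only, not the rules Alyze applies: the `audit` function and the 70-character title threshold are assumptions made for the example.

```python
from html.parser import HTMLParser


class PageAudit(HTMLParser):
    """Records the title, meta description and number of h1 tags of a page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = None
        self.h1_count = 0
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "h1":
            self.h1_count += 1
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = (attrs.get("content") or "").strip()

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit(html, max_title_length=70):
    """Returns a list of issues; max_title_length is an arbitrary example value."""
    page = PageAudit()
    page.feed(html)
    issues = []
    title = page.title.strip()
    if not title:
        issues.append("missing title")
    elif len(title) > max_title_length:
        issues.append("title too long")
    if not page.description:
        issues.append("missing description")
    if page.h1_count == 0:
        issues.append("missing h1")
    return issues


weak_page = "<html><head><title>Home</title></head><body><p>Hello</p></body></html>"
good_page = (
    "<html><head><title>Home</title>"
    '<meta name="description" content="A short summary of the page.">'
    "</head><body><h1>Welcome</h1></body></html>"
)

print(audit(weak_page))
print(audit(good_page))
```

A real audit would apply many more rules; the point here is only that each check reduces to inspecting a few tags of the parsed page.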

Simple, effective help for your digital strategy

Our SEO crawler comes with built-in guidance designed to help you interpret the results of the analysis yourself. You'll be able to see which errors need to be corrected and which optimisations need to be made to please Google and the other search engines. In this way, you can put in place the most useful measures for each URL so that your website ranks better.

Crawl export modules in PDF and XLSX format allow you to share the results of your SEO audit with all interested parties. Whether they are your customers or your website developers, they can even be given direct access to the web version of the site analysis if you so choose.